In the public’s collective consciousness, artificial intelligence (AI) is personified by characters from science fiction such as Lieutenant Commander Data from Star Trek: The Next Generation, Kryten from Red Dwarf, Ash from the original Alien movie (1979), and many others. In real life, however, this kind of self-aware automaton simply doesn’t exist. The AI that makes the news with increasing regularity today usually looks more like an ordinary computer.

Google DeepMind

Of course, AI is scalable. The game engine Unity 3D can run AI behavior models (often built as state machines) on an inexpensive laptop, while far more ambitious systems, such as those developed by Google’s DeepMind, can run on hardware that fills entire floors of a building. It’s this scalability that has made AI almost ubiquitous in the tech community – and its possible applications continue to grow.

While Elon Musk and other critics of AI sometimes view the technology through the lens of Terminator 2, many of its uses are quite prosaic – cropping images, writing songs, and mapping diseases. The company Beth.bet has even built an algorithmic tool intended to beat bookmakers at horse racing, using past results and other data points to pick race winners for its users.

For all its modern associations, though, AI is not a new concept, either in science fiction or in the real world.

Turing Test

Forbes claims that the history of AI can be traced all the way back to 14th-century Catalonia, but intelligent machines as we’ve come to know them arguably began with Alan Turing and his now-famous Turing Test (1950), which asks whether a machine can hold a conversation that a human judge cannot reliably tell apart from one with another person. The science fiction writer Isaac Asimov had already done a great deal to popularise the idea eight years earlier, when his 1942 short story “Runaround” introduced the Three Laws of Robotics; it was later collected in I, Robot (1950).

AI requires a balance of hardware and programming. The Perceptron, one of the first machines capable of learning, existed both as a program on the IBM 704 computer and as a large machine built from electric motors and photocells. It was designed solely to recognize images, yet the US Navy expected the Perceptron to become conscious and capable of reproducing itself. Clearly, our high expectations for AI date back at least to 1958.
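The learning idea behind the Perceptron is simple enough to sketch in a few lines. The Python below is a minimal illustration under stated assumptions: it applies the classic perceptron learning rule to the logical AND function rather than to images, and the function names (`predict`, `train_perceptron`), learning rate and epoch limit are hypothetical choices for this example, not details of the original IBM 704 program or the motor-driven hardware.

```python
# A minimal sketch of the perceptron learning rule. Assumption: a toy task
# (logical AND) stands in for the image recognition the original machine did.


def predict(weights, bias, inputs):
    """Fire (return 1) if the weighted sum of the inputs exceeds zero."""
    total = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > 0 else 0


def train_perceptron(samples, labels, learning_rate=0.1, epochs=20):
    """Learn weights by adjusting them only when a prediction is wrong.

    learning_rate and epochs are illustrative values, not historical ones.
    """
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for inputs, target in zip(samples, labels):
            error = target - predict(weights, bias, inputs)
            if error != 0:
                mistakes += 1
                # Nudge each weight toward the correct answer, in proportion
                # to the input that contributed to the mistake.
                weights = [w + learning_rate * error * x
                           for w, x in zip(weights, inputs)]
                bias += learning_rate * error
        if mistakes == 0:  # every example classified correctly: stop early
            break
    return weights, bias


if __name__ == "__main__":
    samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
    labels = [0, 0, 0, 1]  # logical AND
    weights, bias = train_perceptron(samples, labels)
    for inputs in samples:
        print(inputs, "->", predict(weights, bias, inputs))
```

The machine is never told the rule for AND; it simply corrects its weights after each wrong answer until it stops making mistakes, which is the sense in which the Perceptron "learned". A single perceptron can only learn patterns that a straight line (or flat plane) can separate, a limitation that tempered the early optimism.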

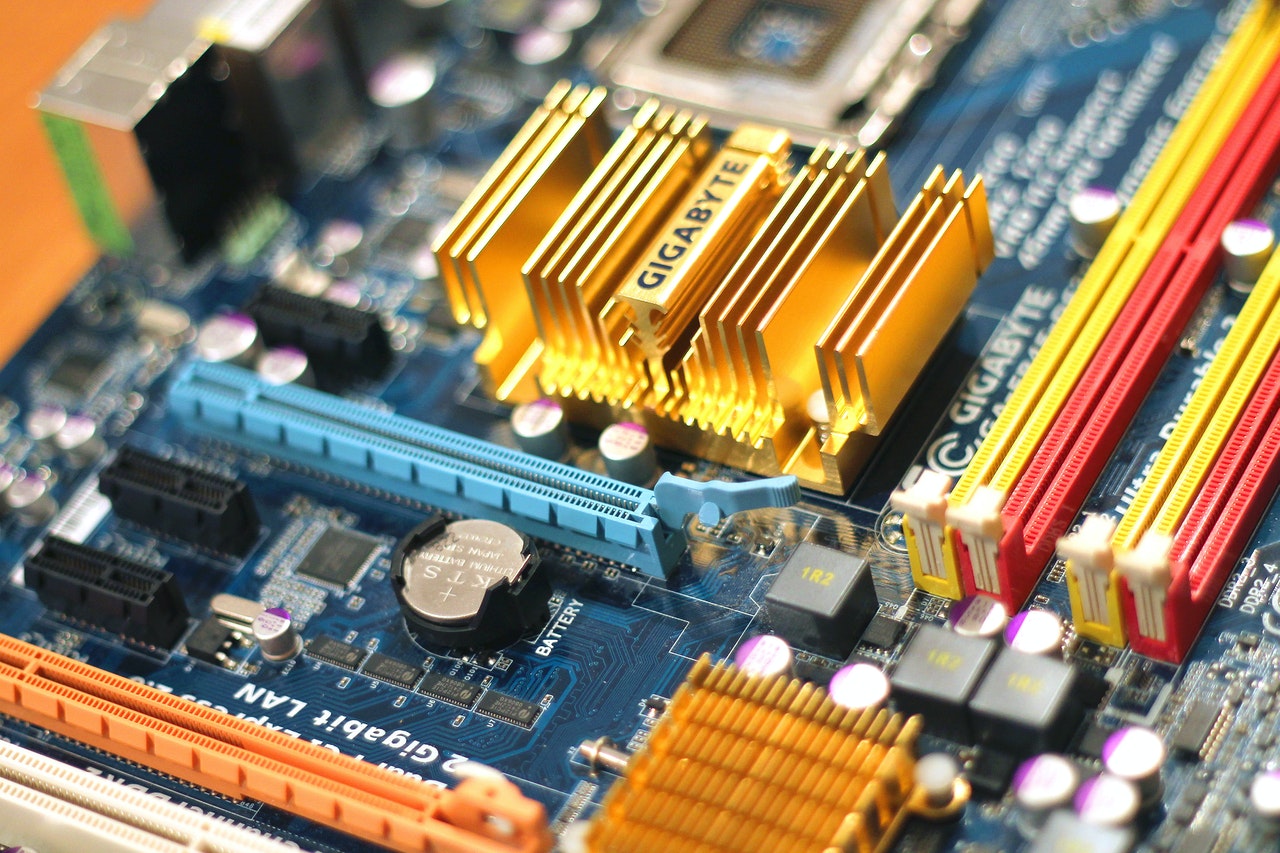

AI Chips

Today, AI relies on a combination of CPUs and GPUs. The former, the Central Processing Unit, has an obvious role as the ‘brain’ of the machine, but GPUs, or Graphics Processing Units, may seem out of place. However, as their use in cryptocurrency mining shows, modern GPUs excel at splitting a large job into many small calculations and running them simultaneously, giving the machine far more throughput for the repetitive arithmetic that AI workloads demand.

The arrival of dedicated AI chips has muddied the waters a little as far as hardware is concerned. Many ‘smart’ devices hand their AI tasks to such a chip rather than to a general-purpose CPU or GPU, performing them on the device itself without resorting to bulky hardware or cloud computing. This kind of miniaturization has been vital to technological progress throughout history, from space flight to mobile phones, and it may prove just as critical to the ongoing development of AI.

It’ll certainly be interesting to see what AI will be capable of in the future.